Machine learning is a great tool for classifying and interpreting huge amounts of data. For us, the most interesting part of this technology has always been working with data that is not visible to the human eye.

There is a lot more to the world than we actively perceive. When we started looking at possible projects for the ML class this summer, one topic stood out: unseen bio-data. What if you could control any device with just a few sensors placed somewhere on your body?

Background

The human body produces many data streams that help keep us alive and working properly. An example: try making a fist. If you look at your forearm, you can see your tendons moving. With different types of sensors, you can track these micro-movements as well as changes in blood flow, muscle activity and even capacitance. Our goal was to create a signal classifier that can be trained on any of these ways of monitoring biodata and interpret a generalized stream of data. With the right application, you could use gestures to control real or virtual prosthetics, or turn any conductive surface into a touch interface.

The Problem

We are surrounded by many different invisible signals. These signals can be used to build human-computer or bio-computer interfaces. However, interpreting them is not easy, and there is usually no universal algorithm that solves it.

First we need a sensor...

The first step towards a proof-of-concept prototype was to build a sensor that can track any of the data streams mentioned above. We decided to use a basic ECG sensor to see if we could tell how many fingers are touching a surface. Using an Arduino connected to a laptop (which has to be grounded), we generate a stream of capacitance values across a range of frequencies, which the sensor monitors. As a first test surface, we used a glass bowl of water. So the goal was set: classify whether there is one finger in the water, multiple fingers, or even a whole hand.

... and then software

Plotting the data stream from the Arduino revealed a visible change in the graph when touching the water. But when we tried to see a difference between different numbers of fingers, the changes became so small that we needed a neural net to tell the states apart.

How to classify signal data - our approach

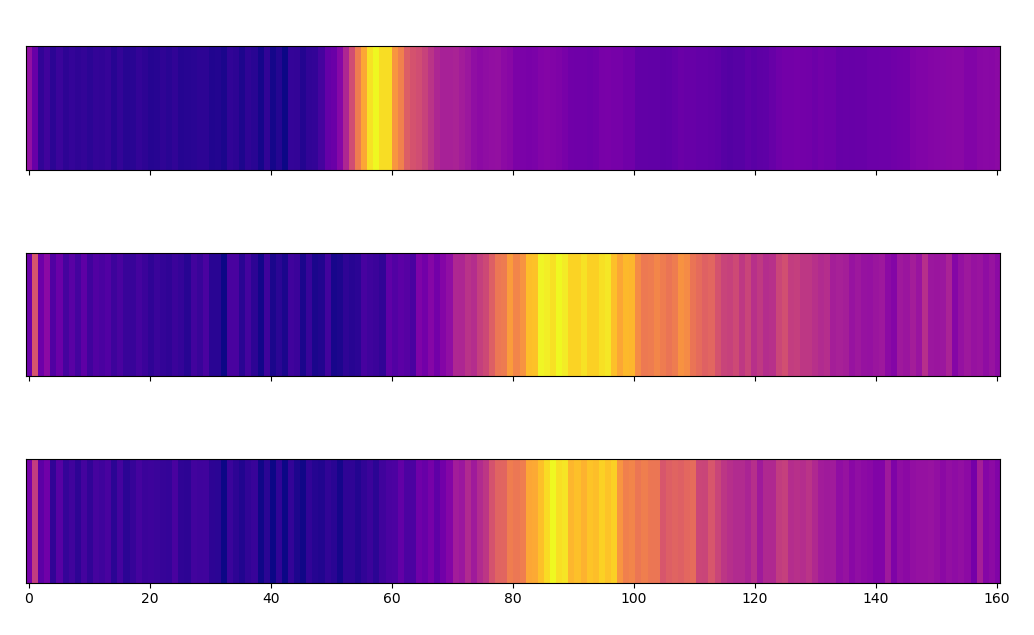

So we are getting an endless stream of frequencies and values from our sensor. The first step was to package the values into one generalized graph of 160 frequencies.
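The packaging step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: the function names `frames` and `normalize` are hypothetical, and scaling each frame to the range 0 to 1 is only our assumption about what a "generalized" graph could mean.

```python
from typing import Iterable, Iterator, List, Sequence

FRAME_SIZE = 160  # values per frame, as mentioned in the article


def frames(stream: Iterable[float], size: int = FRAME_SIZE) -> Iterator[List[float]]:
    """Group a flat stream of sensor values into fixed-size frames."""
    buffer: List[float] = []
    for value in stream:
        buffer.append(value)
        if len(buffer) == size:
            yield buffer
            buffer = []
    # An incomplete trailing frame is dropped on purpose.


def normalize(frame: Sequence[float]) -> List[float]:
    """Scale one frame to the range [0, 1] (assumed preprocessing step)."""
    lo, hi = min(frame), max(frame)
    span = hi - lo
    if span == 0:
        # A completely flat frame carries no shape information.
        return [0.0] * len(frame)
    return [(value - lo) / span for value in frame]
```

Because `frames` is a generator, it works on a stream that never ends: every time 160 values have arrived, one frame is handed on to the next step.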

The plotted data from the Arduino looked promising.

There were two possible approaches with this dataset: use all the values directly as input for our network, or translate the data into a form that might be easier and faster to classify. After a bit of research we found a method that is used a lot when dealing with frequencies and time-series data: turning the raw data into images. In audio and speech classification, for example, you would translate the audio waveform into a spectrogram. This allows you to use conventional image classifiers to look at differences in the frequencies. The biggest advantage of this method is probably that you can augment the data in a way that fits your problem. Noise can be generated easily to create a more comprehensive dataset, and specific parts of the data can be enhanced and emphasized.
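As an illustration of the noise augmentation mentioned above, here is a small sketch that creates several noisy copies of one frame. The function name `augment_with_noise`, the Gaussian noise model and the default values for `copies` and `sigma` are assumptions made for this example, not settings taken from the project.

```python
import random
from typing import List, Optional, Sequence


def augment_with_noise(
    frame: Sequence[float],
    copies: int = 5,
    sigma: float = 0.01,
    seed: Optional[int] = None,
) -> List[List[float]]:
    """Return `copies` variants of `frame` with Gaussian noise added."""
    rng = random.Random(seed)  # a fixed seed makes the output reproducible
    return [
        [value + rng.gauss(0.0, sigma) for value in frame]
        for _ in range(copies)
    ]
```

A suitable `sigma` depends on the scale of the sensor values. If it is too large, the small differences between one and several fingers could get buried in the added noise.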

To generate these images we decided on a heatmap. We created it with matplotlib, the de facto standard Python library for plotting.

Data with no finger inside the water.

Data with one finger inside the water.
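A heatmap like this could be rendered with matplotlib roughly as shown below. This is a sketch under assumptions: we guess that several consecutive frames are stacked into a 2D array (one row per frame, one column per frequency), and the function name `save_heatmap`, the colormap and the image size are placeholders rather than the settings used in the project.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen, no display needed

import matplotlib.pyplot as plt
import numpy as np


def save_heatmap(frames, target, cmap="viridis"):
    """Render stacked frames as a borderless heatmap and save it as a PNG.

    `frames` is a 2D sequence (rows: frames over time, columns: frequencies).
    `target` is a file path or a writable binary file object.
    """
    data = np.asarray(frames, dtype=float)
    fig, ax = plt.subplots(figsize=(2.24, 2.24), dpi=100)
    ax.imshow(data, aspect="auto", cmap=cmap, interpolation="nearest")
    ax.axis("off")  # the classifier should see data, not axes and ticks
    fig.subplots_adjust(left=0, right=1, bottom=0, top=1)
    fig.savefig(target, format="png")
    plt.close(fig)  # free memory when generating many images
```

Removing axes and margins matters here because every pixel that is not data is something the image classifier has to learn to ignore.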

These images can now be used to train an image classifier. Luckily, we had already built a pretty solid classifier in our last project*, which, after a few tweaks, turned out to be good enough for testing different types of images.

* How to build an image classifier with transfer learning will be another article, coming soon™.

Classifying the different states turned into a process of trial and error. You have to find the right kind of image generation to fit your problem. Also, we could not reuse the same trained model, because the data from the sensor changed with every small difference in the setup. When you try to classify the capacitance of your body, even wearing different shoes can make a difference.

The Result

In the end we managed to train the model a few times to successfully differentiate between one finger, multiple fingers and a whole hand. With an accuracy somewhere in the 80 percent range, there is certainly room for improvement. While the proof of concept was achieved, the code and hardware are way too unstable to be used in any meaningful project. There are some changes that could improve the accuracy and reusability. The sensor could be improved by adding some simple noise filters. The images could also be changed to show a broader time frame and more depth of the data.
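One simple way to filter noise on the software side would be a moving average over the incoming values. This is only one possible option and not something we implemented. The function name `moving_average` and the default window size are chosen for illustration.

```python
from collections import deque
from typing import Deque, Iterable, Iterator


def moving_average(values: Iterable[float], window: int = 5) -> Iterator[float]:
    """Yield the running mean of the last `window` values."""
    if window < 1:
        raise ValueError("window must be at least 1")
    recent: Deque[float] = deque(maxlen=window)
    total = 0.0
    for value in values:
        if len(recent) == window:
            total -= recent[0]  # this value is about to be pushed out
        recent.append(value)
        total += value
        yield total / len(recent)
```

A larger window smooths more, but it also blurs the small changes that separate one finger from several, so the window size would have to be tuned against the classifier's accuracy.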

Learnings

Enhancing the data was a crucial part of filtering the frequencies to find the small changes that matter. The project was a great way to see how much impact small changes in the image generation can have. While we both had some experience using machine learning methods to solve problems, this project was mainly focused on learning how to get interesting data and use it correctly. Working with real-time data was another aspect that was new for us. When working on this project or similar ones, it would certainly help to fully understand the concept of threading beforehand 🙃.
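To give an idea of why threading comes up with real-time data: reading the sensor and processing the frames usually run at different speeds, so it helps to decouple them with a queue. The sketch below uses only the Python standard library. All names (`read_sensor`, `consume_frames`, `run`) are hypothetical, and `source` stands in for the real serial connection.

```python
import queue
import threading
from typing import Callable, Iterable, List

_DONE = object()  # sentinel that marks the end of the stream


def read_sensor(source: Iterable[float], out: queue.Queue) -> None:
    """Producer: push every incoming value into the queue."""
    for value in source:
        out.put(value)
    out.put(_DONE)


def consume_frames(inp: queue.Queue, size: int,
                   handle: Callable[[List[float]], None]) -> None:
    """Consumer: collect values into frames and pass each full frame on."""
    frame: List[float] = []
    while True:
        value = inp.get()  # blocks until the reader delivers a value
        if value is _DONE:
            break
        frame.append(value)
        if len(frame) == size:
            handle(frame)
            frame = []


def run(source: Iterable[float], size: int,
        handle: Callable[[List[float]], None]) -> None:
    """Read in a background thread while frames are handled in this one."""
    values: queue.Queue = queue.Queue()
    reader = threading.Thread(target=read_sensor, args=(source, values), daemon=True)
    reader.start()
    consume_frames(values, size, handle)
    reader.join()
```

With this split, a slow step such as image generation or classification delays the consumer, while the reader thread keeps collecting values in the queue.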

This article is part of the class "Creating Rich User Experiences with Applied Machine Learning", held by Meghan Kane at Hochschule Darmstadt, summer term 2019.

Maximilian Brandl (@brandlmax) & Philipp Kaltofen (@p_kalt)

Take a look at the full code: Project Atlantis on GitHub